A Brief History of Firewalls

We can tell how successful an invention is by how hard it is to figure out who really came up with it. This happened with modern calculus, which was a battle between Isaac Newton and Gottfried Leibniz, and also with Charles Darwin and Alfred Wallace when they thought up the theory of evolution.

Basically, in a lot of cases, everyone working in a certain field starts moving in the same direction, sometimes all at the same time. That’s what seems to have happened with firewalls.

The tech that connects networks hit a roadblock: “We’re trying really hard to get different devices talking to each other, but there’s someone on the other end of that network cable I don’t want snooping around my systems.”

The first step toward solving this problem popped up in Cisco routers. In 1985, they got this cool feature called “Access Control Lists” (ACL), which were basically lists of network addresses that routers should block traffic from, based on stuff like where the communication was coming from and going to.

Since routers were in a prime spot within the network setup, admins liked the ACLs, but there were a couple of problems: first, the rules were super basic and didn’t have many options; and second, when the lists got too long, the devices started to have performance issues. Upgrading these routers would cost a pretty penny.
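To make the idea concrete, here is a minimal sketch of how a first-match access list can be evaluated. It is not Cisco's implementation or syntax: the `AclEntry` structure, the `evaluate` function, and the example addresses (taken from documentation ranges) are all assumptions made for illustration. The linear scan also hints at why very long lists hurt performance.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class AclEntry:
    """One hypothetical ACL line: an action plus source/destination networks."""
    action: str  # "permit" or "deny"
    source: ipaddress.IPv4Network
    destination: ipaddress.IPv4Network


def build_entry(action, source, destination):
    # Helper (illustrative only) that parses CIDR strings into network objects.
    return AclEntry(action, ipaddress.ip_network(source), ipaddress.ip_network(destination))


def evaluate(acl, src, dst):
    """Return the action of the first entry matching src/dst.

    Every packet walks the list from the top, so the cost grows with
    the number of entries. This sketch assumes an implicit "deny" when
    nothing matches.
    """
    src_ip = ipaddress.ip_address(src)
    dst_ip = ipaddress.ip_address(dst)
    for entry in acl:
        if src_ip in entry.source and dst_ip in entry.destination:
            return entry.action
    return "deny"


# Example list: block one outside network, let everyone else reach the servers.
EXAMPLE_ACL = [
    build_entry("deny", "203.0.113.0/24", "0.0.0.0/0"),
    build_entry("permit", "0.0.0.0/0", "192.0.2.0/24"),
]
```

With this list, `evaluate(EXAMPLE_ACL, "203.0.113.7", "192.0.2.10")` hits the first entry and is denied, while a packet from any other source to the same server is permitted by the second.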

So, in the late ’80s, two things happened:

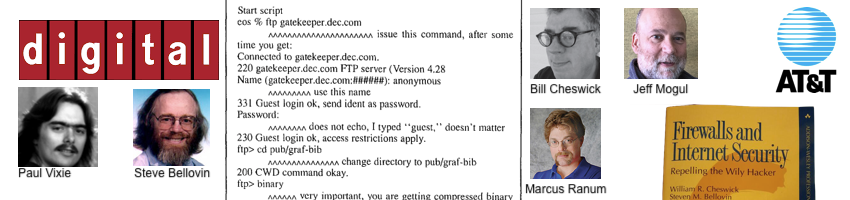

The lab at Digital Equipment Corporation (the same folks who created AltaVista in 1995) published a paper about the system used at the gatekeeper.dec.com address. This system was in charge of managing access to and from the corporate network. Paul Vixie was one of the designers, and he put together a server using spare parts lying around the lab.

The DEC gateway was just a computer with two network cards. Users had an access account on this machine, and they had to connect to it before they could get to the resources on the other side.

Meanwhile, at AT&T labs, Dave Presotto and Howard Trickey were building a system that sat between two networks, just like the DEC gateway. But theirs worked as a proxy, receiving and forwarding connections in both directions after checking a list of allowed access types.

William Cheswick and Steven Bellovin joined the project and published a bunch of papers that led to the 1994 book “Firewalls and Internet Security: Repelling the Wily Hacker.” This book laid the foundation for the concept of firewalls that we know today.

“Firewalls are barriers between ‘us’ and ‘them’ for arbitrary values of ‘them’” – Steve Bellovin

In the early ’90s, Marcus Ranum, who was working with Fred Avolio at DEC, was asked to set up a system similar to the Gatekeeper for their unit. His approach was to get rid of user accounts, on the theory that 99% of issues were related to access accounts on the system. So, he figured that a system without local users would be safer.

Also at DEC, Jeff Mogul was working on screend, a piece of software that analyzed a connection stream and decided, based on an access list, if it should be allowed or blocked. It was kinda like ACLs in routers, but with way more freedom in defining the types of access to be controlled.

A week and 10,000 lines of code later, Ranum unveiled Gatekeeper V2, which combined features from the first version with AT&T’s proxy concepts and Cisco’s ACLs. This system was the first-ever firewall to be sold by a company. It was called Screening External Access Link, or SEAL (which also means to seal or close up) and was licensed to DuPont in 1991 for $75,000 (plus installation fees).


At that point, the firewall systems market was wide open. Raptor Systems, founded by David Pensak (a DuPont employee when SEAL was deployed), released its Eagle firewall six months later, followed closely by ANS InterLock.

During this time, Avolio and Ranum left DEC and joined TIS (Trusted Information Systems), a company founded in 1983 by Steve Walker, a former NSA employee. About a month later, DARPA funded the creation of a modular proxy system to build the firewall protecting the whitehouse.gov site. The first White House email server was hosted at TIS.

The result of all this research was the birth, in 1993, of the Firewall Toolkit. This project aimed to improve the quality of firewalls in use by creating a set of tools that could be used to build other systems. Version 2 of this toolkit became the foundation for the Gauntlet firewall.

In 1993, the Gauntlet firewall fit on 2 floppy disks and cost $25,000.

The Gauntlet had a text-based configuration interface and instruction manuals – a big deal back then – but it was still pretty clunky and complicated to manage.

A new firewall concept had been in the works since 1992, thanks to Bob Braden and Annette DeSchon at the University of Southern California. Called Visas, this architecture introduced two innovations. The first was a graphical admin interface. It could be used to configure the firewall from Windows and Mac workstations, with all the colorful icons and mouse clicks these platforms allowed.

The second innovation was the concept of the stateful firewall, also known as dynamic packet filtering. This method was also researched at AT&T by Presotto and his team (including Cheswick and Bellovin), but its use was limited to internal systems.

Before dynamic filters, a firewall had to be super specific about rules allowing packets to pass in a certain direction (like from inside the network to the internet) and rules for letting response packets back in. This meant having to create a bunch of firewall rules, which over time, made things confusing and inefficient.

Another issue was that with a static set of rules for handling network traffic, someone could send packets into the protected network by making them look like responses to legitimate requests. Kinda like letting a car drive the wrong way down a one-way street, as long as it’s in reverse.

Dynamic rule control simplified firewall administration and boosted security. With this feature, you only needed to create the outbound rule. When a request triggered this rule, super specific rules would automatically be created to allow response packets for that request to enter.
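A rough sketch of that behavior, assuming a simplified connection model keyed only on addresses and ports (real stateful firewalls also track protocol state, timeouts, and much more). The `StatefulFilter` class and its method names are invented for this illustration.

```python
class StatefulFilter:
    """Toy dynamic packet filter: only outbound rules are configured by hand."""

    def __init__(self, allowed_outbound_ports):
        # The single kind of rule the admin writes: which destination
        # ports internal hosts may connect to.
        self.allowed_outbound_ports = set(allowed_outbound_ports)
        # Dynamically created "return" rules, one per active request.
        self.connections = set()

    def outbound(self, src_ip, src_port, dst_ip, dst_port):
        """Check an outgoing packet; on success, remember the expected reply."""
        if dst_port not in self.allowed_outbound_ports:
            return False
        # The reply travels in the opposite direction, so store the flipped tuple.
        self.connections.add((dst_ip, dst_port, src_ip, src_port))
        return True

    def inbound(self, src_ip, src_port, dst_ip, dst_port):
        """Allow an incoming packet only if it answers a recorded request."""
        return (src_ip, src_port, dst_ip, dst_port) in self.connections
```

A packet arriving from `198.51.100.9:80` for `10.0.0.5:40000` is dropped until the internal host has actually sent a request to that server from that port. A forged "response" aimed at any other port never matches a recorded connection.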

These features were implemented in a new system in 1994 by Israeli developer Gil Shwed. After a few unsuccessful attempts to find representatives in the US (Steve Walker at TIS turned down the idea), Shwed founded Check Point together with Marius Nacht and Shlomo Kramer. He and his team were the first to build the generation of firewalls that really took off commercially.

Check Point was also the first to sell the system as an appliance, providing a closed box to connect to the network and manage from your graphical workstation. It was given the fancy name FireWall-1.

A feature that network admins really appreciated also emerged: the ability to “open up” communication packets and analyze them for clues on how the firewall should behave. A good example of its usefulness is packet filtering for FTP connections.

An FTP connection works through two channels. The first one handles communication control, like commands to list folders and start downloading files. The second channel is used only for data transmission, including the requested file content.

The big complication here is that the data connection is initiated in the opposite direction of the control connection. That is, a user inside a network protected by a firewall starts their FTP connection on port 21 of a server on the internet. When a file is requested, the server initiates a connection back to the user’s computer to deliver its content. And the port used for this connection is random, dynamically chosen, and agreed upon between the user and server through the control connection.

However, a firewall configured to protect an internal network would never allow an incoming connection request. The assumption was that only connections initiated from inside the network were authorized to receive response packets from the outside. The data connection was blocked precisely because it was made from the outside in.

In the first half of the 90s, the expansion of deep packet inspection capabilities began. This technique allowed the firewall to “read” and “understand” the packets that crossed it, giving it the opportunity to adapt its own operation.

With this insight, it was possible, for example, to analyze the communication of an FTP control channel and extract the port number agreed upon between the user and server for establishing the data channel from within the packets. This way, the firewall could anticipate and create a specific allow rule for that connection, which would be established from the outside in.
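As an illustration, in active-mode FTP the client announces the data endpoint with a `PORT h1,h2,h3,h4,p1,p2` command, where the port number is `p1 * 256 + p2`. The sketch below shows how an inspecting firewall might pull that endpoint out of a control-channel line. The function name and the regular expression are assumptions for this example, not code from any real product.

```python
import re

# Matches an active-mode FTP "PORT" command as seen on the control channel.
PORT_COMMAND = re.compile(
    r"^PORT (\d{1,3}),(\d{1,3}),(\d{1,3}),(\d{1,3}),(\d{1,3}),(\d{1,3})\s*$"
)


def parse_port_command(line):
    """Return (host, port) announced by a PORT command, or None if not one."""
    match = PORT_COMMAND.match(line)
    if match is None:
        return None
    numbers = [int(group) for group in match.groups()]
    if any(n > 255 for n in numbers):
        return None  # each field must fit in one byte
    host = ".".join(str(n) for n in numbers[:4])
    # The last two fields are the high and low bytes of the port number.
    port = numbers[4] * 256 + numbers[5]
    return host, port
```

For example, `parse_port_command("PORT 10,0,0,5,156,64")` yields `("10.0.0.5", 40000)`, which is exactly the address and port the firewall would need to open temporarily for the incoming data connection.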

This technique brought additional security to various protocols, especially when it started to be used to check the integrity of communication, evaluating if data packets really belonged to the connection they claimed to be part of and if they were within the standards established by the protocol they claimed to represent.

The careful evaluation and manipulation of packets’ internal data had already been proposed as a concept in 1986 by Dorothy Denning and led to a new segment of solutions called Intrusion Detection Systems (IDS), but few commercial solutions emerged until the second half of the 90s.

IDSs were small alert-generating machines connected to a switch where they could passively monitor all network traffic. The technique consisted of feeding the system with a “signature database” or “behavior patterns” that described content or actions usually observed only in systems under attack.
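In its simplest form, signature matching boils down to searching traffic for known-bad byte patterns. The sketch below assumes a tiny made-up signature database; the signature names and patterns are purely illustrative and are not taken from any real IDS rule set.

```python
# Hypothetical signature database: name -> byte pattern seen in attacks.
SIGNATURES = {
    "directory-traversal": b"../../",
    "shell-invocation": b"/bin/sh",
}


def match_signatures(payload, signatures=SIGNATURES):
    """Return the sorted names of all signatures found in a packet payload."""
    return sorted(name for name, pattern in signatures.items() if pattern in payload)
```

A passive IDS would run something like this over every captured packet and raise an alert whenever the returned list is not empty, without interfering with the traffic itself.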

Solutions of this type had an increasing adoption rate between 1997 and 2002, the year when Gartner suggested their replacement with solutions that were less reactive and more proactive.

By combining IDS and packet filtering functions, firewalls took on another role: intrusion prevention (Intrusion Prevention Systems, or IPS).

It then became possible to allow communication through a certain protocol, like legitimate access to a company’s internal systems, but still evaluate each packet for signs that could define whether that connection was malicious or not. If the previously authorized connection fit one of these patterns, it would be interrupted by the firewall.

To perform this evaluation, the firewall needed to know details about the applications it was protecting. To allow a connection to a database and analyze whether the user is trying to exploit a vulnerability to destroy it or not, it’s necessary to understand what’s good and bad for a database. And that means the application layer needs to be analyzed.

In 2003, almost all IDS manufacturers were renaming their products to IPS and providing a method to place them between the Internet and the internal network (inline mode), giving them the opportunity to block access, going one step beyond just reporting it to the administrator.

But it was in 2004, with the large-scale contamination of systems victimized by the Sasser worm, that IPS tools gained everyone’s attention. After all, creating signatures and identifying worm infection behavior was a trivial task for an IPS, and manufacturers boasted as much as they could that their customers had not been affected by the new threat.

The Sasser worm boosted the adoption of IPSs.

Having an IPS was then an established necessity in the minds of consumers. It was time for the war of functionalities: among those small boxes with shiny LEDs, the ones offering the greatest detection capacity for unauthorized access would be sales leaders and prosper.

Analyzing network traffic was enough to detect behavior patterns, but to implement some technologies, it was necessary to return to the old proxy concept used at DEC 15 years earlier. Web and email accesses were intercepted by the firewall, which no longer allowed direct connections between users and servers. The firewall itself started making these connections on the Internet based on internal demand.

This method allows greater control over the content that enters the network. A file download, for example, is subject to various checks within the firewall itself before being delivered to the requesting user. The same happens with emails, which can go through a series of antivirus and antispam tests before being finally forwarded to their final destination.

In a short time, firewalls became true Swiss Army knives. They were multi-purpose war tanks, increasingly taking control of the network. However, another technology was gaining strength in corporate networks: virtual private networks (VPN).

The concept was created in the 1980s and used in the creation of virtual networks within the circuits of telephone companies. But from the second half of the 1990s, tools were created that allowed connecting two or more networks through an encrypted tunnel, using the existing interconnection infrastructure for Internet access.

With VPNs, it became possible to replace expensive dedicated links between company networks (headquarters and branches, factories, and research laboratories, etc.) with relatively inexpensive Internet connections. The feasibility of this change lay in the fact that these networks would communicate through a secure channel, without being subject to data interception, even when traveling over a public and considerably promiscuous network.

VPNs allowed the use of the Internet to securely transmit data while reducing costs!

Manufacturers like Check Point and Cisco themselves provided VPN concentrators that allowed connections from both remote networks and remote users. Employees, salespeople, or field technicians could connect to these concentrators from anywhere on the Internet and, through this tunnel, access information or resources on the internal network.

But this created another problem…

Being an encrypted tunnel, this network traffic was unreadable for the access control tools present in the then cutting-edge firewalls. Connections went through without being intercepted, analyzed, and subjected to the controls of the company’s usage policy. There were only two options to solve this issue:

The first was to move the VPN concentrator to a position where the encrypted tunnel would be undone before passing through the firewall and not after entering the company’s network. However, as firewalls were usually positioned at the network edge, connecting them to the Internet, this would mean decrypting the VPN communication content while it was still in a dangerous zone.

The second option was to make the firewall responsible for creating the encrypted tunnel. In this case, accesses from remote networks and users would be decrypted as soon as they left the Internet and arrived at the company’s network, but still at a point where they could be analyzed by the firewall.

And so firewalls accumulated yet another function: VPN concentrators and terminators.

These devices came to be called UTM (Unified Threat Management) and already had features such as packet filtering, proxies, anti-virus, anti-spam, VPN concentrator, content filtering, IDS/IPS, and in more robust solutions, advanced routing capabilities, load balancing, and extensive reporting and monitoring possibilities.

In addition to the technical advantages that motivated the merging of so many technologies into a single network point, another advantage was soon perceived by customers: a UTM cost less than the sum of all the independent devices it replaced.

The integration of technologies from different manufacturers was also quite costly (when not impossible), so the appeal of a factory-integrated solution also weighed on the decision of IT managers. A single user account could authenticate a person for web access, provide anti-spam in their mailbox, enable a VPN connection, etc., all managed through a concise interface.

Most of the UTM market today is divided between suppliers that offer the functionality and integration expected from a unified solution, some with more granular configurations, others with greater ease of use.

For the future, however, there are already some aspects that will transform today’s UTM solutions into what is being called Next Generation UTM (NG UTM) or Next Generation Firewall (NGFW).

These new systems will be able to overcome current barriers to network integration and control. They will have greater capacity and flexibility in management. They will be able to protect access based on reputation, correlate logs and events, integrate with endpoint protection solutions (for example, prevent access to the company network if the anti-virus is outdated), and manage vulnerabilities of other network assets.

With Web 2.0, where applications are transformed into online systems, new controls need to be implemented. It is necessary to identify different services within the same site, for example, allowing access to Facebook but blocking chat or games within this platform.

Another function absorbed by these solutions is DLP (Data Loss Prevention), allowing organizations to identify and classify information crossing the corporate environment border, reducing the risks of disclosing confidential data and strengthening their security policy.

Soon, we will witness the integration of mobile device management solutions, with protection extending beyond the corporate environment, ensuring control over users and their various devices (smartphones, tablets, computers, etc.) wherever they may be.

Market share in this area has fluctuated among suppliers, indicating a highly dynamic market. Significant acquisitions have occurred in recent years, and much integration is expected to be made within current security platforms.

And the evolution continues on its path. It is essential, therefore, to stay attentive to new technologies and the market, always seeking the best set of tools to meet the growing security demands of our corporations.
